On Bandits and Swipes – Gamification of Search

Stefan Otte

Active Learning or: How I Learned to Stop Worrying and Love Small Data

Stefan Otte

"Nothing Is Quite So Practical as a Good Theory"

– Kurt Lewin

Active Learning

"The key idea behind active learning is that a machine learning algorithm can achieve greater accuracy with fewer training labels if it is allowed to choose the data from which it learns.

An active learner may pose queries, usually in the form of unlabeled data instances to be labeled by an oracle (e.g., a human annotator).

Active learning is well-motivated in many modern machine learning problems, where unlabeled data may be abundant or easily obtained, but labels are difficult, time-consuming, or expensive to obtain."

– Burr Settles, Active Learning Literature Survey

greater accuracy with fewer training labels

→ "good dataTM"

actively query for data

→ sequential decision making

\({\huge \textbf{X} \rightarrow} \begin{bmatrix} cat\\ dog\\ \vdots\\ cat \end{bmatrix}\)

\({\huge \textbf{X} \rightarrow} \begin{bmatrix} ?\\ ?\\ \vdots\\ ? \end{bmatrix}\)

What is Interesting?

What is Interesting?

- uncertainty

- least confident

- margin

- entropy

- query-by-committee

- expected model change (decision theory)

- expected error reduction

- expected variance reduction

- …

Gamification of Search

Multi-Armed Bandits

Problem statement

- Find a multi-armed bandit

- Play arms using bandit theory

- Profit $$$

Problem statement

- given a bandit with \(n\) arms

- each arm \(i \in {1,\dots,n}\) returns reward

\[y \sim P(y; \theta_i)\]

Goal: Find a policy that \[\max \sum_{t=1}^T y_t\]

UCB

past performance + exploration bonus

UCB1

Play each bandit once

Then play bandit that \[\Large \arg\max_i \; \bar\mu_i + \sqrt{\frac{2\ln n}{n_i}}\]

- \(\bar\mu_i\): mean reward of bandit \(i\)

- \(n\): total rounds played

- \(n_i\): rounds bandit \(i\) was played

Demo

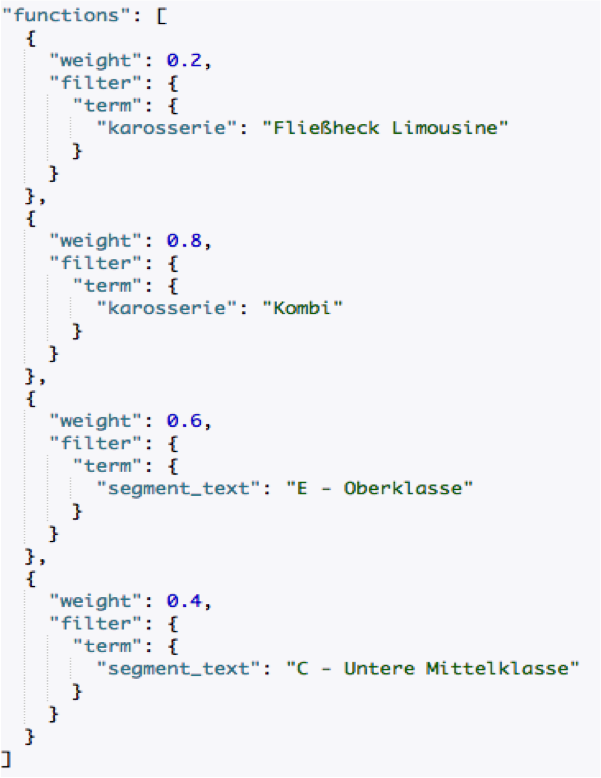

One Bandit per Feature

- brand bandit

- car body bandit

- segment bandit

Ranking with Elasticsearch

Popularity Bias

Practical Remarks

- Python all the way down ;D

- sklearn

- Flask REST API

- Elasticsearch

Conclusion

Active Learning or: How I Learned to Stop Worrying and Love Small Data

Related Topics

- Sequential Decision Making

- Global Optimizaiton

- Experimental Design

- (Bayesian) Reinforcement Learning

- Optimal solution exists: planning in belief space, but is infeasible

- Tuning hyperparams with Hyperband

Thanks!

Questions?

Stefan Otte